Microsoft's Phi-4 LLM is out on Ollama! It's a small open source model that gets really good benchmark scores: on the MMLU benchmark, Phi-4 scores 84.8, nipping at the heels of GPT-4o's 88.1.

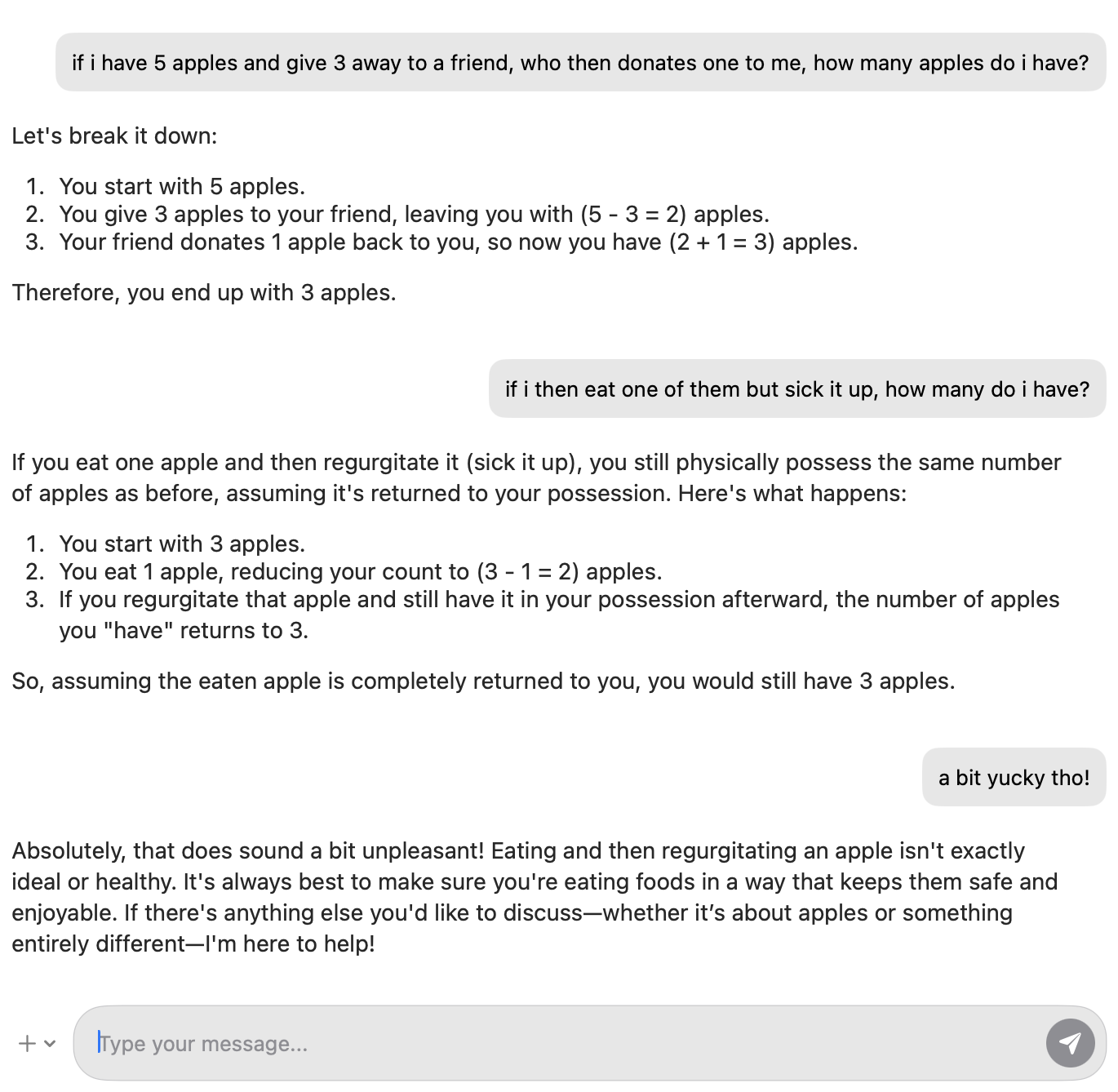

Phi-4 has only 14B parameters, which means a lot of us can run it on our laptops. The attached image shows me chatting with it on my MacBook. It's fast, just as fast as a cloud-hosted model.
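If you'd rather talk to it from code than from the terminal, here is a minimal sketch of calling the local model over Ollama's REST API. It assumes Ollama is running on its default port (11434) and that you've already pulled the model with `ollama pull phi4`. The helper names `build_request` and `ask` are just mine for illustration, not part of any library.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "phi4") -> urllib.request.Request:
    """Build a non-streaming generate request for a locally served model."""
    payload = {
        "model": model,    # assumes the model was pulled with `ollama pull phi4`
        "prompt": prompt,
        "stream": False,   # ask for one JSON object instead of a stream of chunks
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "phi4", timeout: float = 120.0) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With streaming off, the generated text is in the "response" field.
    return body["response"]
```

With the server up, something like `print(ask("Explain MMLU in one sentence."))` should print the model's answer. I stuck to the standard library so there's nothing extra to install.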

The license is MIT, which basically means you can do anything you like with it.

Online chatter so far suggests (no surprise here) that benchmarks aren't the same as real-life experience and Phi-4 isn't up there with GPT-4o. However, it is competitive with other open models 2-4 times its size. I don't see it as a general-purpose assistant, but for embedded uses it can have a real role.

That we have an open model of this quality, that I can run on my laptop, is pretty amazing – the world of smaller open models continues to develop at an incredible speed 🚀